The University’s Computer Based Testing Center will install updated software after a server failure in November left students scrambling to reschedule tests.

When the servers crashed in November, David O’Brien, assistant director of computing for the Office of Assessment and Evaluation, said the failure was a result of outdated software.

“The software should have been able to handle a null function without shutting down,” said testing lab manager Derek Wilson in November. “Once we rebooted the server, it kept doing the same thing and locking up. When we finally identified the problem, we had to rewrite the function.”

During winter break, the OAE began the update process. The machines will run Perception version 5.4, replacing version 3.4.

“With any software, there can be glitches,” O’Brien said. “The new version puts us in a lot better standing.”

On Sept. 16 — two months before the crash — the Student Technology Fee Oversight Committee granted the Office of Assessment and Evaluation’s request for about $190,000 to purchase a new version of its Perception software and a corresponding annual license.

But September wasn’t the first time the OAE requested funding from the Oversight Committee.

O’Brien said when computer testing became more commonplace almost a decade ago, student representatives were not always supportive. But the mentality has changed in recent years.

Graduate Council President and Oversight Committee member Thomas Rodgers said OAE funding was requested in spring 2013, but passing it would have put the committee in a deficit.

“We’re like the stewards of the funds,” Rodgers said. “We didn’t want to spend money we didn’t have.”

The OAE’s funding is now part of the yearly Information Technology Services proposal, and it will regularly request hardware and software updates.

“It’s not as up in the air,” Rodgers said.

Some students who were stuck in line the day the software crashed have mixed feelings about how their technology fees are being used.

“I don’t think the fee is a problem, they just need to use it in a timely manner,” said DaMika Woodard, athletic training freshman.

Others said they don’t like taking computer-based exams.

“The computer room isn’t that comfortable and it’s kind of intimidating,” said Virtuous Poullard, sports administration sophomore.

Regardless of personal preferences about test-taking, O’Brien said there is a rising demand for computer-based testing at the University.

“If every professor called me tomorrow and said they were done with computer testing, I wouldn’t need to ask for funding,” O’Brien said.

O’Brien said the OAE administered more than 100,000 exams last semester and was open for more than 700 hours of testing.

“We were down for four hours with the glitch,” O’Brien said. “That’s just over 0.5 percent of our hours, which I think is pretty phenomenal.”

Mishaps related to outdated software

By Renee Barrow

January 14, 2014

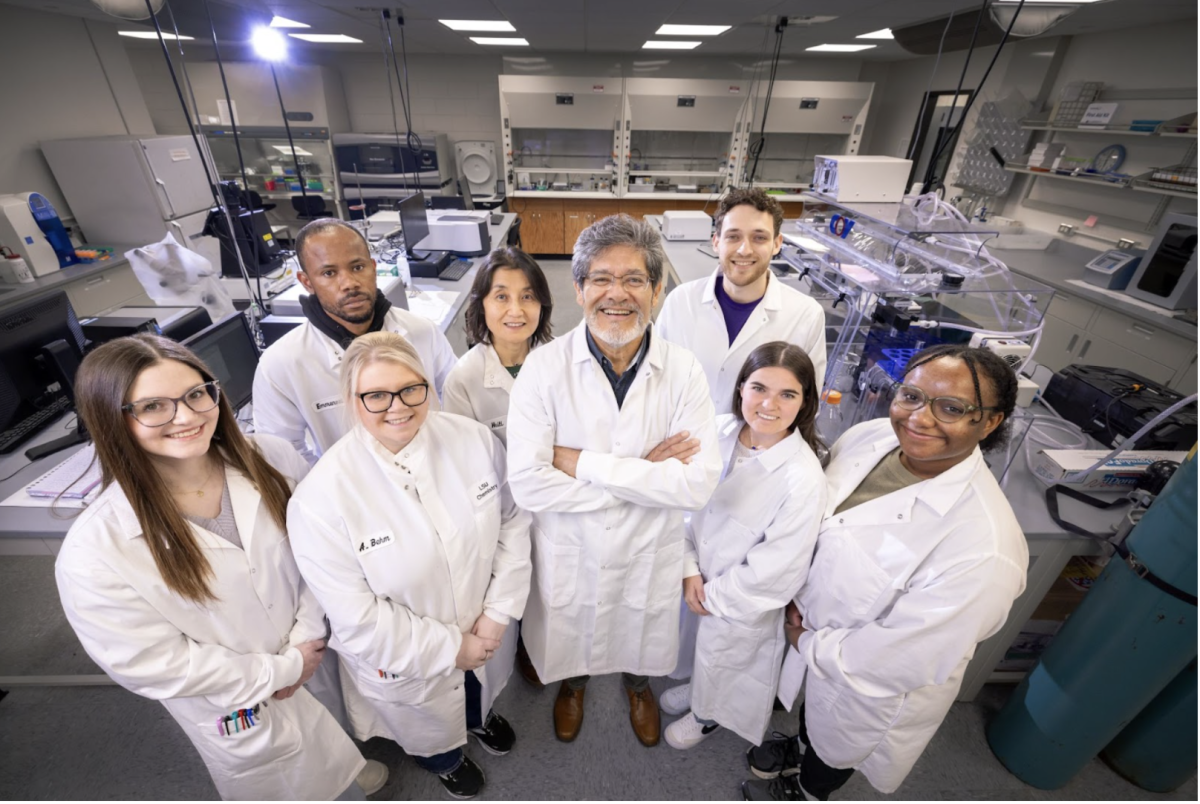

A line of students waiting to get into the Testing Center in Himes Hall stretches across the quad Tuesday, Nov. 19, 2013.
